
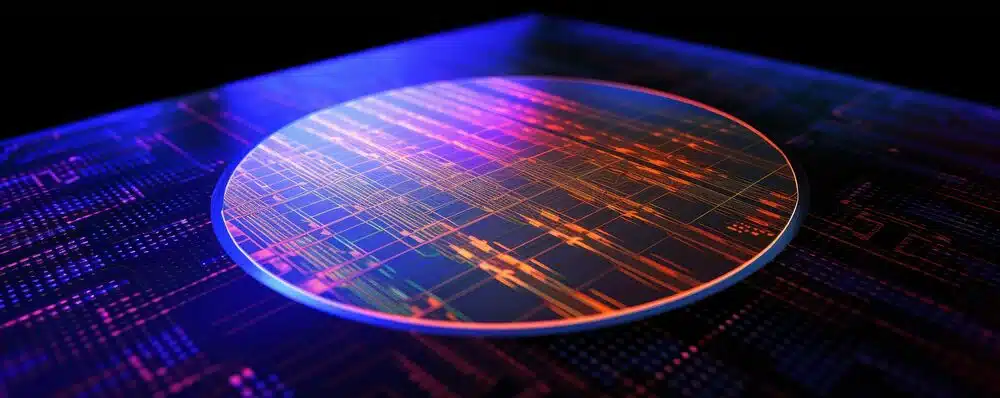

The transistors on today’s most advanced computer chips have features measuring only a few dozen nanometers. While chipmakers such as NVIDIA and TSMC continue down the miniaturization path, Cerebras is bucking that trend and going big with a wafer-scale chip that packs trillions of transistors. The chip’s dramatic increase in size is matched by a significant increase in speed.

Wafer-scale technology is moving to the fore in artificial intelligence (AI) applications such as training and running large language models (LLMs), as well as in scientific workloads like simulating molecules. Cerebras’ latest wafer-scale advancement has even outperformed the world’s top supercomputer on certain simulation tasks.

NVIDIA’s rapid growth rested on its timely focus on AI and on the advanced packaging and manufacturing expertise of Taiwan Semiconductor Manufacturing Company (TSMC) and South Korea’s SK Hynix. Meanwhile, although supercomputers can model materials containing up to trillions of atoms with high precision, the process can be slow. The challenge is to move beyond the limits of Moore’s Law with faster, more scalable solutions, and trillion-transistor wafer-scale chips are gaining traction as one answer. This blog looks at how that is happening.

Wafer-Scale Chips

A wafer-scale chip is a single, gigantic circuit that occupies an entire silicon wafer. In conventional integrated circuit (IC) manufacturing, each wafer is cut (diced) into many individual chips, which are then packaged and mounted on circuit boards. Chipmakers raise the logic density of these processors by shrinking transistors and interconnects. Wafer-scale integration, by contrast, skips dicing altogether and keeps the whole wafer as one chip, while advanced packaging technology helps address the logic density challenge.

Cerebras recently announced the CS-3, its third-generation wafer-scale AI accelerator built for training advanced AI models.[1] The CS-3 delivers twice the performance of its predecessor, thanks to a chip with more than 4 trillion transistors that is 57 times larger than the largest GPU. Despite doubling performance, the CS-3 consumes the same amount of power as the previous version. To meet scalability needs, the CS-3 integrates an interconnect fabric technology known as SwarmX, which allows up to 2,048 CS-3 systems to be linked into AI supercomputers delivering up to a quarter of a zettaflops (one zettaflops is 10²¹ floating-point operations per second). Because it can train models of up to 24 trillion parameters, a single system lets machine learning (ML) researchers build models ten times larger than GPT-4 and Claude.
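The cluster-scale figures above can be sanity-checked with simple arithmetic. The Python sketch below divides the quoted quarter-zettaflops ceiling by the 2,048-system maximum to estimate the implied peak per CS-3. It assumes, purely for illustration, that aggregate throughput scales linearly with the number of systems; the per-system value is derived here, not a number quoted in this article.

```python
# Back-of-the-envelope check of the CS-3 cluster figures quoted above.
# Assumption (illustrative only): cluster throughput = systems x per-system peak.

ZETTA = 10**21  # 1 zettaFLOPS = 10^21 floating-point operations per second
PETA = 10**15   # 1 petaFLOPS = 10^15 floating-point operations per second


def implied_per_system(cluster_flops: float, systems: int) -> float:
    """Return the per-system peak implied by a cluster total, assuming linear scaling."""
    return cluster_flops / systems


cluster_peak = 0.25 * ZETTA  # "a quarter of a zettaflops"
max_systems = 2_048          # CS-3 systems linked via SwarmX

per_system = implied_per_system(cluster_peak, max_systems)
print(f"Implied peak per CS-3: about {per_system / PETA:.0f} petaFLOPS")
# prints "Implied peak per CS-3: about 122 petaFLOPS"
```

Under that linear-scaling assumption, each CS-3 would need to contribute roughly 122 petaflops of peak compute to reach the quoted cluster total.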

Cerebras’ latest wafer-scale engine, the WSE-3, is the third generation of the company’s platform (Figure 1). Unlike traditional devices with small cache memories, the WSE-3 spreads 44GB of superfast on-chip SRAM evenly across the chip’s surface. This gives each core single-clock-cycle access to fast memory at extremely high bandwidth. Compared with the leading GPU, the WSE-3 offers 880 times more on-chip memory capacity and 7,000 times more memory bandwidth.
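Spreading the SRAM across the wafer means each core sees only a small local slice of the total. As a rough illustration, the Python sketch below divides the 44GB figure by the 900,000-core count cited elsewhere in this article. It assumes that "GB" means decimal gigabytes (10⁹ bytes) and that the memory is split exactly evenly; the real per-core allocation may differ.

```python
# Rough per-core share of the WSE-3's on-chip SRAM.
# Assumptions: decimal gigabytes and a perfectly even split across cores.

GB = 10**9              # bytes in a decimal gigabyte
SRAM_BYTES = 44 * GB    # total on-chip SRAM quoted in the article
CORES = 900_000         # core count quoted in the article


def per_core_share(total: float, cores: int) -> float:
    """Return each core's share of a resource divided evenly among cores."""
    return total / cores


share = per_core_share(SRAM_BYTES, CORES)
print(f"SRAM per core: {share / 1_000:.1f} kB ({share / 1_024:.1f} KiB)")
# prints "SRAM per core: 48.9 kB (47.7 KiB)"
```

The point is less the size of each slice than its proximity: every core can reach its local memory in a single clock cycle.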

The WSE-3’s on-wafer interconnect eliminates the communication slowdowns and inefficiencies of linking hundreds of small devices with wires and cables. It also delivers more than 3,715 times the bandwidth available between graphics processors.

The Wafer-Scale Advantage

The Cerebras WSE-3 surpasses other processors in AI-optimized cores, memory speed, and on-chip fabric bandwidth.

In a recent keynote at Applied Machine Learning Days (AMLD), Cerebras Chief System Architect Jean-Philippe Fricker discussed the insatiable demand for AI chips and the need to move beyond Moore’s Law.[2] Unlike conventional multi-chip approaches, which bring complex communication challenges, Cerebras uses a single uncut wafer carrying approximately 4 trillion transistors. In essence, it is a single processor with 900,000 cores.

In molecular simulations, this approach assigns one simulated atom to each core, allowing neighboring cores to rapidly exchange information about position, motion, and energy. The WSE-3, launched three years after the WSE-2 debuted in 2021, doubled performance on simulations of tantalum lattices.
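The one-atom-per-core idea can be illustrated with a toy model. The Python sketch below is hypothetical and is not Cerebras’ code or its actual interatomic potential: it places one atom on each cell of a small 2D grid of simulated "cores," connects neighboring atoms with simple harmonic springs, and advances time with a semi-implicit (symplectic) Euler step. The property it shows is locality: each core updates its own atom using only positions exchanged with its four nearest neighbors.

```python
from dataclasses import dataclass


@dataclass
class Core:
    """Hypothetical processing element that owns exactly one simulated atom."""
    x: float = 0.0  # displacement of the atom from its lattice site (1D for simplicity)
    v: float = 0.0  # velocity of the atom


def neighbors(i, j, rows, cols):
    """Yield mesh coordinates of the north/south/west/east cores that exist."""
    for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        ni, nj = i + di, j + dj
        if 0 <= ni < rows and 0 <= nj < cols:
            yield ni, nj


def step(grid, k=1.0, mass=1.0, dt=0.01):
    """Advance every atom by one time step using only nearest-neighbor data."""
    rows, cols = len(grid), len(grid[0])
    # Exchange phase: each core publishes its position. Snapshotting first means
    # every core reads neighbor values from the same time step.
    positions = [[core.x for core in row] for row in grid]
    # Compute phase: each core updates its own atom independently.
    for i in range(rows):
        for j in range(cols):
            core = grid[i][j]
            # Harmonic spring force from each neighboring atom.
            force = sum(k * (positions[ni][nj] - positions[i][j])
                        for ni, nj in neighbors(i, j, rows, cols))
            core.v += (force / mass) * dt  # semi-implicit (symplectic) Euler
            core.x += core.v * dt


def total_energy(grid, k=1.0, mass=1.0):
    """Kinetic energy plus spring energy, counting each bond once."""
    rows, cols = len(grid), len(grid[0])
    kinetic = sum(0.5 * mass * c.v ** 2 for row in grid for c in row)
    potential = 0.0
    for i in range(rows):
        for j in range(cols):
            for ni, nj in ((i + 1, j), (i, j + 1)):  # south and east bonds only
                if ni < rows and nj < cols:
                    dx = grid[ni][nj].x - grid[i][j].x
                    potential += 0.5 * k * dx ** 2
    return kinetic + potential


if __name__ == "__main__":
    grid = [[Core() for _ in range(5)] for _ in range(5)]
    grid[2][2].x = 1.0  # displace the center atom and let the disturbance spread
    e0 = total_energy(grid)
    for _ in range(1000):
        step(grid)
    print(f"relative energy drift after 1000 steps: {abs(total_energy(grid) - e0) / e0:.2%}")
```

In this sketch, the `positions` snapshot stands in for the nearest-neighbor message exchange; because no core needs data from beyond its neighbors, all cores could perform their updates in parallel.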

Cerebras partners with TSMC to manufacture its wafer-size AI accelerators on a 5-nanometer process. The chip’s 21 petabytes per second of memory bandwidth exceeds anything else available. Because the system behaves as a single unit, engineers can program it quickly in Python using just 565 lines of code, and programming work that once took three hours now finishes in five minutes.
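The bandwidth figure is easier to grasp per core. The Python sketch below divides the quoted 21 petabytes per second by the 900,000 cores cited earlier, assuming decimal petabytes and an even split, and also computes the ratio implied by the three-hours-to-five-minutes claim. Both results are simple derived ratios, not measured values.

```python
# Derived ratios for the figures quoted in this paragraph.
# Assumptions: decimal units and a perfectly even split of bandwidth across cores.

PB = 10**15                  # bytes in a decimal petabyte
MEM_BANDWIDTH = 21 * PB      # bytes per second, as quoted
CORES = 900_000              # WSE-3 core count, as quoted


def ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator; used for both per-core and speedup ratios."""
    return numerator / denominator


per_core_bw = ratio(MEM_BANDWIDTH, CORES)
time_saving = ratio(3 * 60, 5)  # three hours versus five minutes, both in minutes

print(f"Memory bandwidth per core: {per_core_bw / 10**9:.1f} GB/s")
print(f"Programming-time reduction: {time_saving:.0f}x")
# prints "Memory bandwidth per core: 23.3 GB/s" then "Programming-time reduction: 36x"
```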

Tri-Labs Partnership

Cerebras also collaborates with Sandia, Lawrence Livermore, and Los Alamos National Laboratories, collectively known as Tri-Labs, to deliver wafer-scale technology with the speed and scalability their AI applications require.

In 2022, Sandia National Laboratories began using Cerebras’ engine to accelerate simulations, achieving a 179-fold speedup over the Frontier supercomputer. The technology enabled simulations of materials such as tantalum for fusion reactors, completing a year’s worth of work in just days.
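The "year’s worth of work in days" claim follows directly from the speedup. The minimal Python sketch below assumes the 179-fold figure applies uniformly to an entire year-long simulation campaign, which real workloads may not.

```python
def accelerated_days(baseline_days: float, speedup: float) -> float:
    """Wall-clock days needed when a baseline job runs `speedup` times faster."""
    return baseline_days / speedup


days = accelerated_days(365, 179)  # one year of baseline compute time at a 179x speedup
print(f"A 365-day run at 179x finishes in about {days:.1f} days")
# prints "A 365-day run at 179x finishes in about 2.0 days"
```

That works out to roughly two days, consistent with the "just days" description.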

Wafer-Scale Applications

Advances in materials drive technology forward, repeatedly pushing past limits on heat resistance and strength. Long-timescale simulations based on wafer-scale technology allow scientists to explore phenomena across many domains. For example, materials scientists can study the long-term behavior of complex materials, such as the evolution of grain boundaries in metals, to develop stronger, more resilient materials.[3]

Pharmaceutical researchers will be able to simulate protein folding and drug-target interactions to accelerate the development of life-saving therapies, while the renewable energy industry can optimize catalytic reactions and design more efficient energy storage systems by simulating atomic-scale processes over long timescales.

Cerebras solutions have been used successfully in the following cases:

- Cerebras and G42 trained a world-leading Arabic LLM, which was adopted by Microsoft as a core LLM offering in the Middle East and is now available on Azure.[4]

- Cerebras helped Mayo Clinic develop and enhance health care tools built on an LLM.

- Cerebras helped a company in the energy sector solve problems that demanded greater speed, achieving a speedup of two orders of magnitude.

- KAUST (King Abdullah University of Science and Technology) used Cerebras to run workloads and found that, on those workloads, the solution outperformed the world’s fastest supercomputer.

Future of Wafer-Scale Technology

As the latest AI models grow exponentially, the energy and cost required to train and run them have exploded. Performance is rising too, however, with advancements such as Cerebras’ wafer-scale technology bringing unprecedented speed, scalability, and efficiency and signaling the future of AI computing.

Cerebras may be a niche player, and more conventional chips still dominate the AI chip market, but the speed, efficiency, and scalability of today’s wafer-scale chips hint at a future where chip size and performance are anything but conventional.

Carolyn Mathas is a freelance writer/site editor for United Business Media’s EDN and EE Times, IHS 360, and AspenCore, as well as individual companies. Mathas was Director of Marketing for Securealink and Micrium, Inc., and provided public relations, marketing and writing services to Philips, Altera, Boulder Creek Engineering and Lucent Technologies. She holds an MBA from New York Institute of Technology and a BS in Marketing from University of Phoenix.
