By leveraging world models and transformers, ContextSSL aims to bridge the gap between human-like adaptability and artificial intelligence, paving the way for fairer, more efficient algorithms.

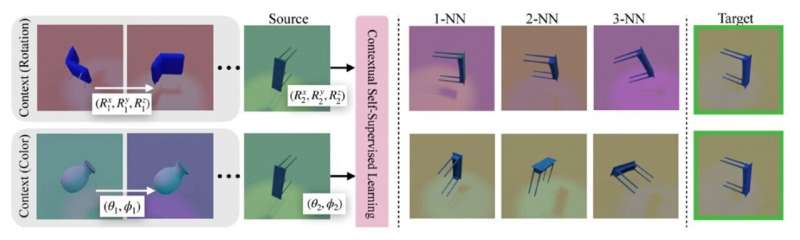

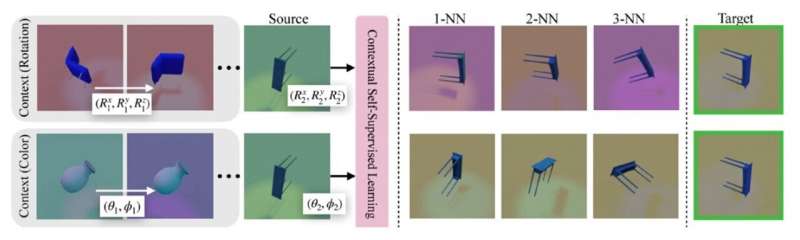

Self-supervised learning (SSL), a rising paradigm in machine learning, is reshaping how models learn without labeled data. SSL methods traditionally rely on pre-defined data augmentations, enforcing invariance or equivariance to specific transformations. However, these inductive priors are fixed at training time, which often limits flexibility across diverse tasks. A representation trained to ignore rotation, for instance, is of little use for a task that depends on orientation.
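For readers who want the two terms pinned down, the standard textbook definitions can be written as follows. The symbols are notation introduced here for illustration and do not come from the study: $f$ is the encoder, $x$ an input, $g$ a transformation such as a rotation, and $T_g$ the corresponding map on the representation space.

$$
\text{invariance:}\quad f(g(x)) = f(x)
\qquad\qquad
\text{equivariance:}\quad f(g(x)) = T_g\big(f(x)\big)
$$

Invariance discards the effect of $g$, while equivariance keeps it in a predictable form. Invariance is the special case in which $T_g$ is the identity.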

An approach called Contextual Self-Supervised Learning (ContextSSL), developed by researchers at MIT’s CSAIL and the Technical University of Munich, addresses these limitations. ContextSSL introduces a mechanism in which representations adapt dynamically to task-specific contexts, removing the need to retrain the model for each new task. At its core, ContextSSL incorporates world models, which are abstract representations of an agent’s environment, and uses a transformer module to encode them as sequences of state-action-next-state triplets. By attending to this context, the model determines whether to enforce invariance or equivariance, depending on the task requirements.
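To make the idea of a context built from state-action-next-state triplets more concrete, here is a minimal sketch in plain Python. It is not the researchers' implementation. The function names, the choice to concatenate each triplet into a single token, and the use of attention without learned projections are all assumptions made for illustration.

```python
import math

def build_context_tokens(states, actions, next_states):
    """Turn each (state, action, next_state) triplet into one token.

    Assumption for illustration: a token is the plain concatenation of
    the three vectors. A real model could tokenize the triplet differently.
    """
    if not (len(states) == len(actions) == len(next_states)):
        raise ValueError("each triplet needs a state, an action, and a next state")
    return [list(s) + list(a) + list(s_next)
            for s, a, s_next in zip(states, actions, next_states)]

def causal_self_attention(tokens):
    """Single-head attention with identity projections (no learned weights).

    Token i attends only to tokens 0..i, so each output summarizes the
    context seen so far.
    """
    dim = len(tokens[0])
    outputs, all_weights = [], []
    for i, query in enumerate(tokens):
        # Scaled dot-product scores against the current and earlier tokens.
        scores = [sum(q * k for q, k in zip(query, tokens[j])) / math.sqrt(dim)
                  for j in range(i + 1)]
        top = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * tokens[j][d] for j, w in enumerate(weights))
                        for d in range(dim)])
        all_weights.append(weights)
    return outputs, all_weights

# Toy context: 2-dimensional states and a 1-dimensional action (made-up values).
states = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]
actions = [[1.0], [-1.0], [0.0]]
next_states = [[1.0, 1.0], [0.0, 0.0], [0.5, 0.5]]

tokens = build_context_tokens(states, actions, next_states)
outputs, weights = causal_self_attention(tokens)
print(len(tokens), len(tokens[0]))
```

The longer the context, the more triplets a later token can attend to. In this sketch, that is the only route by which information about the transformation (the action) reaches the representation.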

In tests on benchmarks such as 3DIEBench and CIFAR-10, ContextSSL adapted its representations to the task at hand. It showed the same versatility in the medical domain: on the MIMIC-III dataset, ContextSSL adapted its representation to gender-specific medical diagnosis tasks, where equivariance was critical. At the same time, it ensured fairness when predicting outcomes such as hospital length of stay by emphasizing invariance.

This adaptability yields better results on fairness metrics such as equalized odds (EO) and equality of opportunity (EOPP), while also improving prediction accuracy for sensitive attributes like gender in tasks where that information is needed. By leveraging transformer models for in-context learning, ContextSSL balances invariance and equivariance in a task-specific manner. This is a significant step toward more flexible, general-purpose SSL frameworks and more efficient, adaptive algorithms.
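The two fairness metrics named above have standard definitions. Equality of opportunity asks for equal true-positive rates across groups, and equalized odds asks for equal true-positive and false-positive rates. The sketch below computes both as gaps between two groups. The function names are hypothetical, the data is a toy example rather than anything from the study, and reporting the equalized-odds gap as the larger of the two rate differences is one common convention assumed here, since the article does not say how the metrics were aggregated.

```python
def positive_rate(y_true, y_pred, group, group_value, label):
    """Share of examples in one group with true label `label` that are
    predicted positive (TPR when label == 1, FPR when label == 0)."""
    preds = [p for t, p, g in zip(y_true, y_pred, group)
             if g == group_value and t == label]
    if not preds:
        raise ValueError("no examples for this group and label")
    return sum(preds) / len(preds)

def equal_opportunity_gap(y_true, y_pred, group):
    """Absolute difference in true-positive rate between groups 0 and 1."""
    return abs(positive_rate(y_true, y_pred, group, 0, 1)
               - positive_rate(y_true, y_pred, group, 1, 1))

def equalized_odds_gap(y_true, y_pred, group):
    """Larger of the TPR gap and the FPR gap (an assumed aggregation)."""
    tpr_gap = equal_opportunity_gap(y_true, y_pred, group)
    fpr_gap = abs(positive_rate(y_true, y_pred, group, 0, 0)
                  - positive_rate(y_true, y_pred, group, 1, 0))
    return max(tpr_gap, fpr_gap)

# Toy binary labels, predictions, and a binary sensitive attribute.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
group = [0, 0, 0, 0, 1, 1, 1, 1]

eopp = equal_opportunity_gap(y_true, y_pred, group)
eo = equalized_odds_gap(y_true, y_pred, group)
print(eopp, eo)
```

A gap of 0 means the classifier treats both groups identically under that metric, and larger values indicate greater disparity.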

“Rather than fine-tuning models for each task, we aim to create a general-purpose system capable of adapting to various environments, similar to human learning,” says Sharut Gupta, CSAIL Ph.D. student and lead author of the study.
