
Combining artificial intelligence with audio and haptic feedback is helping blind and partially sighted users navigate obstacles more safely and independently.

A recent study published in Nature Machine Intelligence introduces a wearable system designed to support navigation for blind and partially sighted individuals. This advanced system employs artificial intelligence (AI) to analyze the environment and alert users when obstacles or objects are nearby, enhancing their ability to move around safely and independently.

Wearable electronic visual assistance devices are a promising alternative to medical treatments and implanted prostheses for people with visual impairments. These systems typically work by converting visual information from the surrounding environment into other sensory signals, such as sound or touch, so that users can better interpret their surroundings and complete everyday tasks. Despite their potential, many existing devices are difficult to use, which has limited their acceptance and widespread adoption.

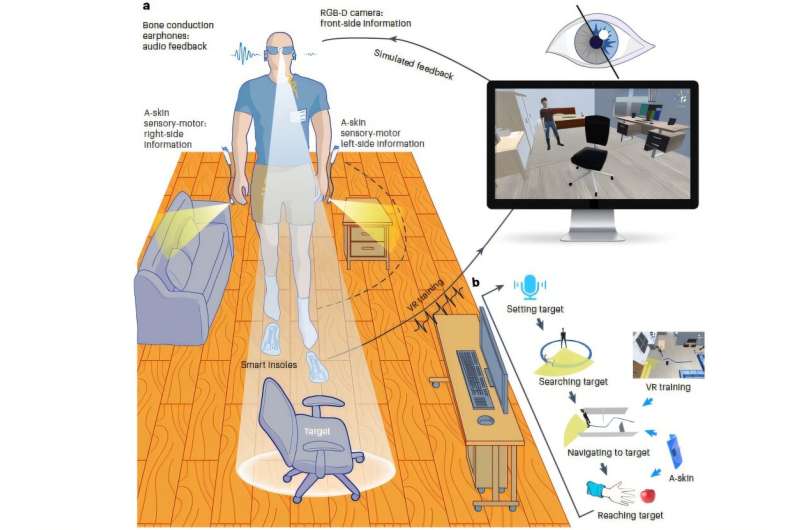

In this study, Leilei Gu and colleagues present a newly developed wearable visual assistance system that delivers navigational guidance through spoken prompts. At the core of the system is an AI-powered algorithm that processes real-time video from an onboard camera to identify a safe, obstacle-free route for the user.
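The article does not describe how the route-finding model works, so the following is only a hypothetical sketch of the general idea. It assumes that some vision model has already reduced each video frame to a list of per-sector obstacle distances (`clearances_m`, ordered left to right), and it simply picks the obstacle-free sector closest to straight ahead. The function name, the 60° field of view, and the 1.5 m safety margin are illustrative assumptions, not details from the study.

```python
from typing import Optional, Sequence


def pick_heading(
    clearances_m: Sequence[float],
    fov_deg: float = 60.0,
    min_clear_m: float = 1.5,
) -> Optional[float]:
    """Pick the free sector closest to straight ahead (hypothetical sketch).

    clearances_m: assumed distance to the nearest obstacle in each vertical
    slice of the camera frame, ordered left to right.
    Returns a heading in degrees (negative = left, positive = right),
    or None if every sector is blocked.
    """
    n = len(clearances_m)
    if n == 0:
        return None
    step = fov_deg / n
    best: Optional[float] = None
    for i, dist in enumerate(clearances_m):
        if dist < min_clear_m:
            continue  # sector blocked: obstacle inside the safety margin
        angle = -fov_deg / 2 + step * (i + 0.5)  # centre angle of sector i
        if best is None or abs(angle) < abs(best):
            best = angle
    return best


print(pick_heading([0.5, 3.0, 0.8, 2.0, 2.5]))  # -> -12.0 (bear slightly left)
```

Preferring the free sector nearest to 0° keeps the suggested path as close as possible to the user's current walking direction; a real system would also need to smooth headings over time so guidance does not jitter from frame to frame.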

To give users clear and intuitive feedback, the system communicates environmental information through two channels. First, bone conduction headphones deliver voice alerts about nearby obstacles along with directional cues, while leaving the ears open to ambient sound for safety. Second, the researchers designed stretchable artificial "skins" worn on the wrists, which produce vibration signals that guide the user's movement and help them avoid obstacles to either side.
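As a rough, hypothetical illustration of how detected obstacles might be split between the two feedback channels, the sketch below sends obstacles in front of the user to a spoken alert and obstacles off to the side to the left or right wrist, with vibration strength rising as the obstacle gets closer. The `Obstacle` type, the ±30° "ahead" cone, the 3 m range, and the linear intensity mapping are all assumptions made for illustration; the study's actual rules are not given in this article.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class Obstacle:
    """Hypothetical detection: bearing relative to the user's heading."""
    bearing_deg: float  # negative = left, positive = right
    distance_m: float


def route_feedback(
    obstacles: Sequence[Obstacle],
    front_half_angle: float = 30.0,
    max_range_m: float = 3.0,
) -> Tuple[List[str], Dict[str, float]]:
    """Split obstacles into voice alerts (ahead) and wrist vibration (sides)."""
    voice: List[str] = []
    haptic: Dict[str, float] = {"left": 0.0, "right": 0.0}
    for ob in obstacles:
        if ob.distance_m > max_range_m:
            continue  # too far away to warn about yet
        if abs(ob.bearing_deg) <= front_half_angle:
            # Obstacle roughly ahead: spoken alert via the headphones
            voice.append(f"Obstacle ahead, {ob.distance_m:.1f} meters")
        else:
            # Obstacle to one side: vibrate that wrist, stronger when closer
            side = "left" if ob.bearing_deg < 0 else "right"
            intensity = 1.0 - ob.distance_m / max_range_m  # in [0, 1]
            haptic[side] = max(haptic[side], intensity)
    return voice, haptic


alerts, vibration = route_feedback(
    [Obstacle(0.0, 1.2), Obstacle(-60.0, 1.5), Obstacle(70.0, 5.0)]
)
print(alerts)     # ['Obstacle ahead, 1.2 meters']
print(vibration)  # {'left': 0.5, 'right': 0.0}
```

Taking the maximum intensity per wrist means the closest side obstacle dominates, so several distant objects never produce a stronger cue than one nearby object.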

The system was rigorously tested using both humanoid robots and blind or partially sighted human participants in virtual and real-world navigation scenarios. Participants demonstrated marked improvements in their ability to navigate complex environments, avoid obstacles, and perform tasks like reaching for and grasping objects after navigating a maze.

These findings highlight the potential of combining visual, audio, and haptic feedback to improve the usability and effectiveness of wearable assistance systems. Looking ahead, the researchers suggest further refining the technology and expanding its use across other areas of assistive technology, where similar sensory integration could provide significant benefits for individuals with disabilities.
