
The system helps robots focus on what matters, using sounds and images to guess human goals and offer the right help quickly and safely.

Robots struggle to make sense of the cluttered real world. Researchers at MIT have developed a system called “Relevance” that helps a robot focus on what matters in a scene. It uses sounds and images to infer what a person wants and then quickly offers help.

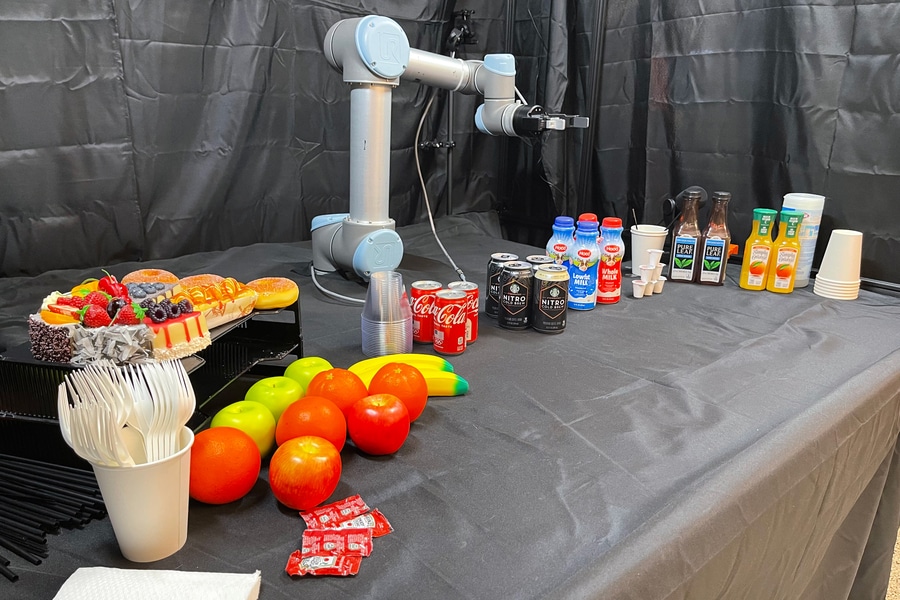

The team tested the system in a simulated breakfast buffet. Using a camera and microphone, the robot watched and listened to people and handed them coffee items. It predicted a person’s goal correctly 90% of the time, picked the right objects 96% of the time, and had 60% fewer accidents.

Finding focus

The team modeled the robot’s approach on the brain’s reticular activating system (RAS), which helps a person focus on important things and ignore the rest. The approach has three steps. First, the robot watches and listens using a camera and microphone, sending what it sees and hears to an AI toolkit that identifies people, objects, actions, and key words. Second, it checks whether anything important is happening, such as a person entering the room. Third, if a person is detected, the robot works out what that person wants and selects the objects most likely to help, for example picking cups and creamers rather than fruits and snacks.
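
The three steps can be illustrated with a minimal Python sketch. This is not the MIT team's code. The `Percept` class stands in for whatever the perception AI outputs, and the `CUE_GOALS` and `GOAL_OBJECTS` lookup tables are hypothetical simplifications of the goal prediction and object selection that the real system learns from data.

```python
from dataclasses import dataclass, field

# Hypothetical lookup tables (assumptions for illustration only).
CUE_GOALS = {"coffee": "making coffee", "cereal": "making cereal"}
GOAL_OBJECTS = {
    "making coffee": {"cup", "creamer"},
    "making cereal": {"bowl", "spoon"},
}


@dataclass
class Percept:
    """Step 1 output: what the perception AI found in the audio and video."""
    people: list = field(default_factory=list)
    objects: list = field(default_factory=list)
    actions: list = field(default_factory=list)
    keywords: list = field(default_factory=list)


def trigger(percept):
    """Step 2: is anything important happening? Here, is a person present?"""
    return len(percept.people) > 0


def infer_goal(percept):
    """Step 3a: guess the person's goal from spoken key words and actions."""
    for cue in percept.keywords + percept.actions:
        for key, goal in CUE_GOALS.items():
            if key in cue.lower():
                return goal
    return None


def relevant_objects(percept):
    """Step 3b: keep only the objects that serve the inferred goal."""
    if not trigger(percept):
        return []
    goal = infer_goal(percept)
    if goal is None:
        return []
    wanted = GOAL_OBJECTS[goal]
    return [obj for obj in percept.objects if obj in wanted]


scene = Percept(
    people=["person_1"],
    objects=["cup", "apple", "creamer", "granola bar"],
    keywords=["coffee"],
)
print(relevant_objects(scene))  # ['cup', 'creamer']
```

With no person in the scene, `relevant_objects` returns an empty list, which mirrors the idea that the relevance step only runs once the trigger check passes.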

Helper mode

The researchers tested their new system with experiments that simulated a conference breakfast buffet. They chose this setup because of the publicly available Breakfast Actions Dataset, which includes videos and images showing common breakfast activities like making coffee, cooking pancakes, making cereal, and frying eggs. Each video and image is labeled with the specific action and the overall goal, such as “making coffee” or “frying eggs.”

Using this dataset, the team trained and tested different algorithms in their AI toolkit to recognize human actions, figure out the person’s goal, and identify the objects needed to help. In their experiments, they set up a robotic arm and gripper, then asked the robot to assist people as they approached a table filled with drinks, snacks, and tableware. When no one was around, the robot’s AI quietly worked in the background, labeling and classifying objects on the table. But when a human was detected, the robot immediately switched to its Relevance phase, quickly finding the objects that would best help the person based on what the AI predicted their goal to be.
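
The switch between background labeling and the Relevance phase can be pictured as a small two-mode controller. The sketch below is an illustration under assumptions, not the researchers' implementation: the `BuffetAssistant` class, its `step` method, and the goal-to-object table passed to it are all hypothetical names, and the goal is supplied directly instead of being predicted by a trained model.

```python
class BuffetAssistant:
    """Toy two-mode controller: label objects when idle, select them when needed."""

    def __init__(self, goal_objects):
        # Hypothetical mapping from a goal to the object labels that serve it.
        self.goal_objects = goal_objects
        self.mode = "background"
        self.table_labels = {}  # object id -> label, filled in while idle

    def step(self, table_detections, human_present, predicted_goal=None):
        if not human_present:
            # Nobody around: keep labeling and classifying what is on the table.
            self.mode = "background"
            self.table_labels.update(table_detections)
            return []
        # A person was detected: switch to the relevance phase and reuse
        # the labels gathered in the background.
        self.mode = "relevance"
        wanted = self.goal_objects.get(predicted_goal, set())
        return sorted(
            obj_id
            for obj_id, label in self.table_labels.items()
            if label in wanted
        )


bot = BuffetAssistant({"making coffee": {"cup", "creamer"}})
bot.step({"obj1": "cup", "obj2": "banana", "obj3": "creamer"}, human_present=False)
print(bot.step({}, human_present=True, predicted_goal="making coffee"))
# ['obj1', 'obj3']
```

Doing the labeling while idle means the selection step only has to filter a table of known objects when a person arrives, which is one plausible reason the robot can respond quickly.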
